At this year’s World Economic Forum in Davos, Marc Benioff, CEO of Salesforce, said in an interview that he believed his generation of CEOs would be the last to manage “exclusively human workforces”. He envisioned hybrid human-agent workforces.

That framing stuck with me. During keynotes throughout the year, I talked about what a hybrid marketing team of the future could look like. I showed a slide with an org chart of humans and agents. And every time, this was the slide that created the strongest reaction. It’s a divisive idea; some people loved it, others found it unrealistic, and a few found it downright terrifying. But it always sparked follow-up conversations.

After one of those sessions, we talked within our team. What if we tried to build our own hybrid team? What if we all learned to build agents and “hired” one into our team? So in Q3, we set out to do exactly that.

How we structured the project

We’re lucky to have a couple of AI champions within our team who have been experimenting with AI both at work and for personal projects (check out Sam’s review of Cursor AI here https://prismic.io/blog/cursor-ai, Angelo’s notes on how Claude Code changed his mind on AI development here https://prismic.io/blog/claude-code, or Lidija & Sam’s experiment with real-time website adaptation here https://prismic.io/blog/conversational-websites-personalization).

Sam took the lead on this project as our “Agent Lab” champion and helped us structure it for success:

- He set up a dedicated Slack channel for the project. We’re a distributed team working across continents and time zones, so this was very helpful to keep comms flowing.

- Within that channel, he built a resources tab with shared learning resources around agents and the tools we were going to build out agents with.

- We held a kickoff session to align on goals, share early ideas, and discuss how we would experiment and collaborate. We made two key decisions here:

  - We included our AI agents in our official goals for the quarter. We knew it would be hard to carve out time for learning in a busy quarter, so we formally made it part of the goals our bonuses partially depend on. This ensured that we would prioritize our agents.

  - After discussing several options, we picked n8n as our main automation platform. It was flexible enough for most of our agent plans, and approachable enough for those of us without a technical background to build with (other options we looked at were Zapier, Make, and LangChain, which we have since started using for other projects as well).

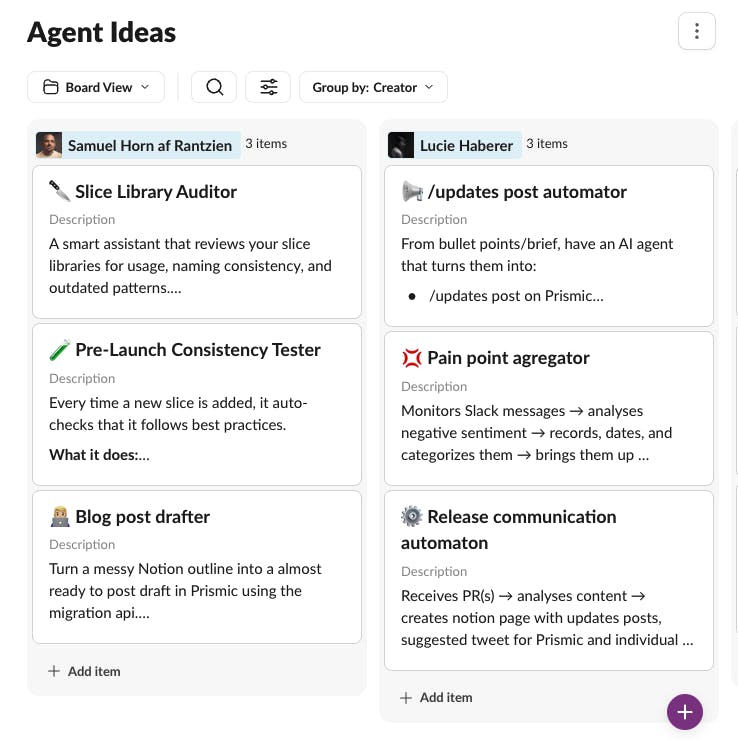

- Over a couple of weeks, we brainstormed and built out a database of agent ideas in a Slack board. Having this time allowed us to keep adding ideas throughout the week as we came across something that was potentially automatable.

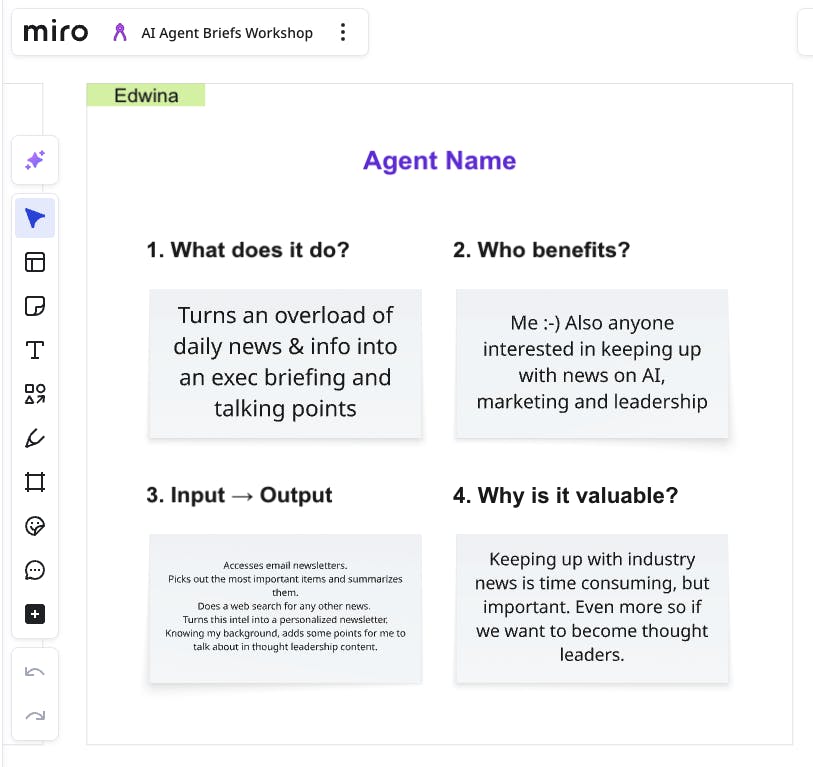

- We then did a live workshop to pitch our ideas to the team, vote on them, and create agent briefs for the most promising ideas. We used a Miro board to do this - post-it walls will of course work as well if you’ve got the opportunity to work together in person!

- From there, we started building! To stay aligned and keep progress going, we scheduled a weekly “Agent Lab” team meeting as a check-in, where we demoed our MVPs, shared progress, and discussed roadblocks.

- Sam also offered 1:1 building jams, where we spent an hour actively working on our agents together. This was particularly helpful for those of us with no technical background, and helped us understand best practices on topics like structuring our agents, building memory in, getting to grips with JSON, or simply ensuring that the agents did what we expected.

- Throughout the building phase, we continued sharing learnings, ideas, and resources in Slack. This led to valuable conversations about the complexity of agents and about when we really needed agents versus more straightforward “AI automations”. It also helped us work through technical issues with n8n and the many tools we started connecting to it.

What we actually built

Here are a few examples of the agents we built in our Lab:

Executive Briefing Agent

I’ve been struggling to keep up with AI news. There’s a new tool or major discovery announced almost daily. So I signed up for a bunch of newsletters, which - big surprise - sat in my Inbox unread. My goal was to consolidate all that news into a single, personalized newsletter.

I built an agent that reviews the newsletters I’m subscribed to, conducts additional web searches, and creates a custom, personalized newsletter for me. My agent has access to my personal profile, knows I work for Prismic, and understands my work, so it can make decisions about what’s relevant to me. It also suggests talking points for thought leadership content (which, I’ll be honest, I have yet to find time to act on).

Sam’s agent:

“I wanted to test how feasible it would be to use n8n as the backend for an entire app. So I built an AI-driven content workflow with three coordinated n8n agents, all originally backed by Notion as the database and UI layer.

Here’s what the three workflows did (simplified):

- Idea Generator — Runs daily → pulls inputs (blogs, subreddits, X accounts) → builds a pool of fresh content → feeds it to an AI agent → generates new post ideas → stores them in Notion.

- Post Drafter — Triggered from the app for a specific idea → an AI research agent gathers context using web search → a drafting agent writes a post in my tone → if there’s code, another agent generates a styled code-image → the draft is saved back to Notion.

- X Poster — Runs daily → checks drafts marked “ready to publish” → downloads media → posts to X.

Everything worked, and Notion served as a surprisingly flexible “UI layer” with changeable statuses and buttons as triggers. But as the system grew, managing logic inside Notion became messy.

So I built a Next.js app with a clean interface for ideas, drafts, and scheduling. n8n still handled the backend logic, but Notion became the bottleneck, so I replaced it with Convex, which fits this kind of structured, reactive workflow much better.

Now I’ve realized…

After switching to Next.js + Convex for the interface and database, it probably makes sense to also move the n8n workflow logic into the same stack. Keeping everything within a single system would simplify the architecture, reduce moving parts, and make the whole app easier to evolve.

And while n8n is fantastic for getting ideas running quickly, with almost no coding knowledge and the ability to build quite advanced automation, if you can code and your end goal is a production-ready app, it’s usually better to treat n8n as a prototyping tool and to build on a more robust, unified foundation from the start.”
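To make the status-driven flow in Sam’s three workflows a bit more concrete, here is a minimal TypeScript sketch of how an item might move from idea to published post. It is not Sam’s implementation: only the “ready to publish” status comes from his description, while the other status names and the `Item`, `advance`, and `readyToPublish` names are assumptions made for illustration.

```typescript
// Hypothetical statuses: only "ready to publish" is named in the post.
type Status = "idea" | "drafted" | "ready to publish" | "published";

interface Item {
  id: string;
  title: string;
  status: Status;
}

// Allowed next status for each status, loosely mirroring the three workflows:
// the Idea Generator creates ideas, the Post Drafter turns them into drafts,
// someone marks a draft as ready, and the poster marks it as published.
const nextStatus: Record<Status, Status | null> = {
  idea: "drafted",
  drafted: "ready to publish",
  "ready to publish": "published",
  published: null,
};

// Return a copy of the item moved to its next status.
function advance(item: Item): Item {
  const next = nextStatus[item.status];
  if (next === null) {
    throw new Error(`Item ${item.id} is already published`);
  }
  return { ...item, status: next };
}

// What a daily poster run would pick up: only items marked as ready.
function readyToPublish(items: Item[]): Item[] {
  return items.filter((item) => item.status === "ready to publish");
}

const items: Item[] = [
  { id: "1", title: "Idea A", status: "idea" },
  { id: "2", title: "Draft B", status: "ready to publish" },
];

// Simulate one poster run: select the ready items and mark them published.
const posted = readyToPublish(items).map(advance);
console.log(posted.map((item) => `${item.id}: ${item.status}`));
```

Keeping the allowed transitions in one table is one way to avoid the scattered logic Sam ran into, whichever database ends up holding the items.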

Lucie’s agent:

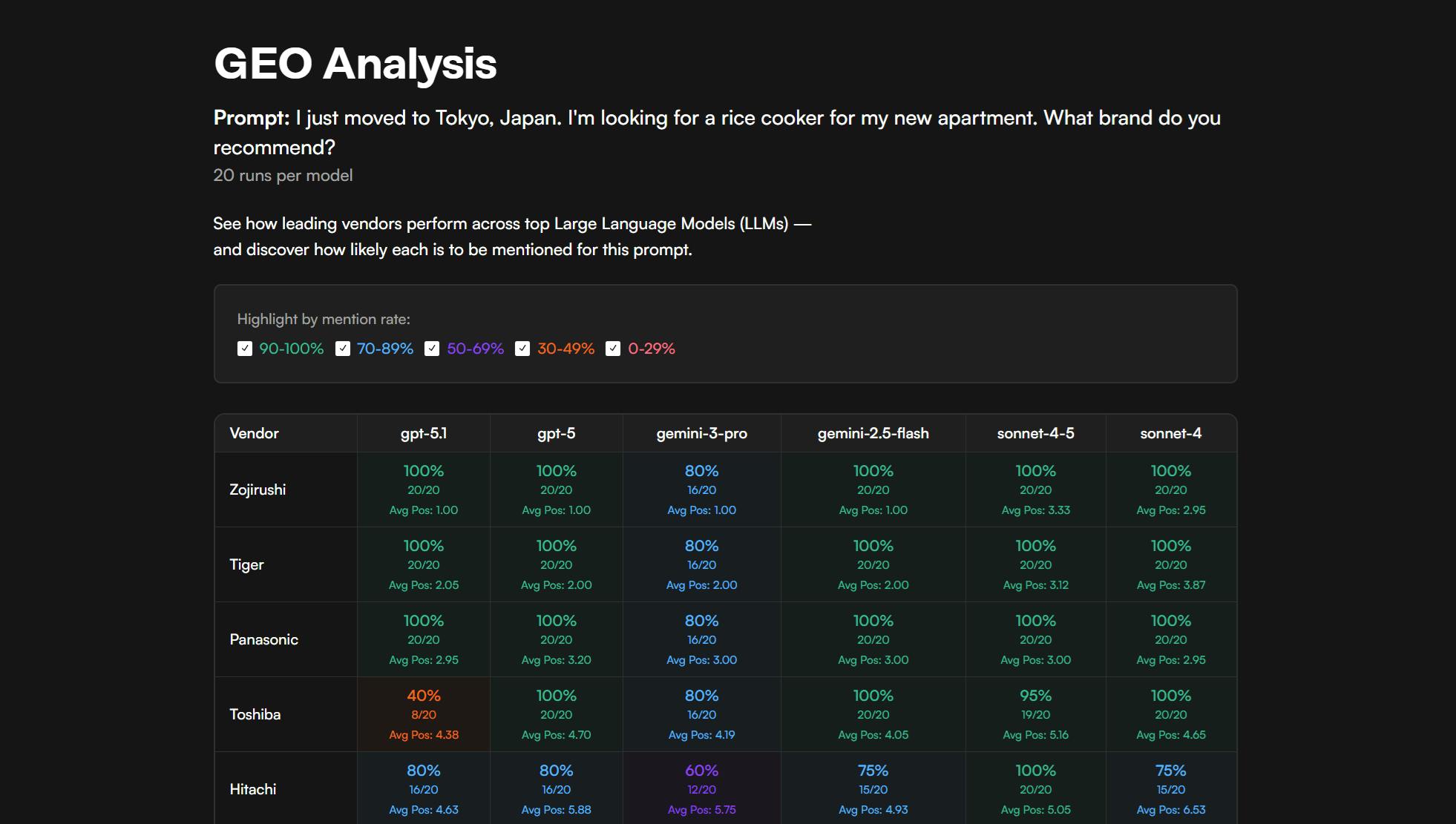

“Whenever a new Large Language Model (LLM) gets released, first, we hear it’s the best model yet, and then we start debating how it actually performs on benchmarks. Inspired by those discussions, I wanted to build a benchmarking agent of my own, but what should it benchmark?

Nowadays, we do most of our searches through ChatGPT and other AI chats. So I decided my agent should benchmark how likely various models are to recommend Prismic in response to prompts like “What CMS should I use for a Next.js site?” The resulting data would then give us insight into how we compare to other CMSs.

So, how do we benchmark language models?

It’s a bit like running a poll! Except that instead of asking a different person every time, you keep asking GPT-5.1 or Gemini 3 what CMS they would recommend, collect their answers, and start to get a picture of how you’re ranking for various queries. I could have built such an agent in n8n, but as an engineer, I took that as the opportunity to try Vercel’s AI SDK and implement it directly in code.

Once I had this “polling agent” built, as LLMs’ answers aren’t set in stone (they can do searches, think, get tweaked, etc.), I tasked it to run polls weekly and to report on Slack. This allowed us to track the evolution of our ranking over time and test whether specific initiatives have a measurable impact.

What started as a small experiment quickly caught some attention internally. I extended the agent to support on-demand polls for arbitrary prompts, including some less work-related ones (and yes, I did end up buying a Tiger). Over time, it evolved into a fully adopted internal tool, now used by many Prismicans.”
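The polling idea Lucie describes can be sketched in a few lines of TypeScript. This is a rough illustration under stated assumptions, not her code: she built hers with Vercel’s AI SDK, whereas this sketch keeps the model call abstract behind a hypothetical `AskModel` function so it assumes no particular API. The `poll`, `mentionRates`, and `fakeModel` names, the canned answers, and “ExampleCMS” are all made up for the example, and counting substring mentions is a simplification of whatever scoring a real benchmark would use.

```typescript
// The model call is abstracted as a function, so no real API is assumed here.
type AskModel = (prompt: string) => Promise<string>;

// Ask the same prompt `runs` times and collect every answer.
async function poll(ask: AskModel, prompt: string, runs: number): Promise<string[]> {
  const answers: string[] = [];
  for (let i = 0; i < runs; i++) {
    answers.push(await ask(prompt));
  }
  return answers;
}

// Share of answers that mention each candidate (case-insensitive substring match).
function mentionRates(answers: string[], candidates: string[]): Map<string, number> {
  const rates = new Map<string, number>();
  for (const candidate of candidates) {
    const needle = candidate.toLowerCase();
    const hits = answers.filter((answer) => answer.toLowerCase().includes(needle)).length;
    rates.set(candidate, answers.length === 0 ? 0 : hits / answers.length);
  }
  return rates;
}

// Stand-in "model" that cycles through canned answers, for demonstration only.
function fakeModel(canned: string[]): AskModel {
  let i = 0;
  return async () => canned[i++ % canned.length] ?? "";
}

async function demo(): Promise<Map<string, number>> {
  const ask = fakeModel([
    "I'd go with Prismic.",
    "Prismic or ExampleCMS would both work.",
    "ExampleCMS is a solid choice.",
    "Try Prismic.",
  ]);
  const answers = await poll(ask, "What CMS should I use for a Next.js site?", 4);
  return mentionRates(answers, ["Prismic", "ExampleCMS"]);
}

demo().then((rates) => {
  rates.forEach((rate, name) => console.log(`${name}: ${rate}`));
});
```

Running the same poll on a schedule and storing the rates per week would give the kind of trend line described above.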

What we learned

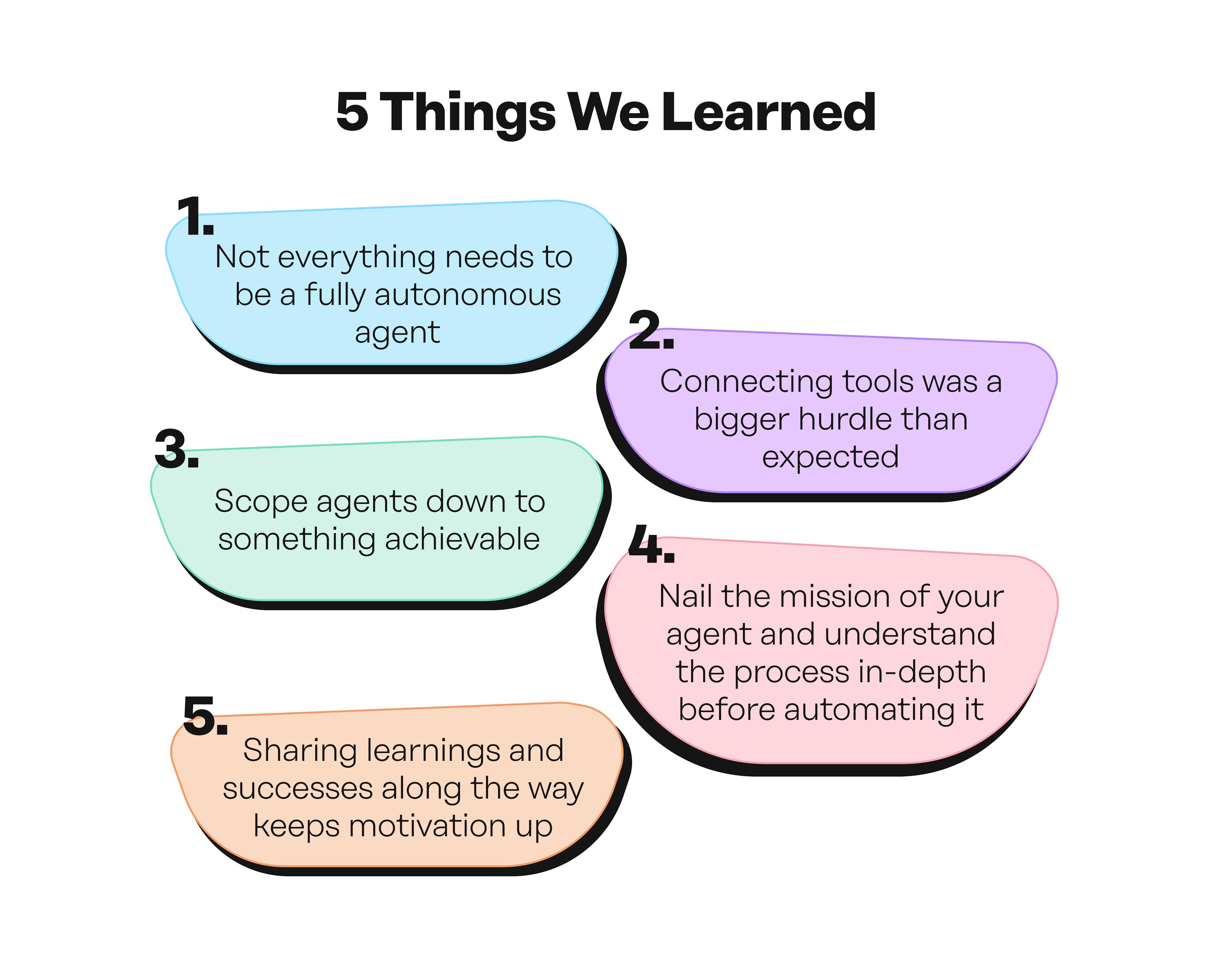

1. Not everything needs to be a fully autonomous agent

Because we set out to build AGENTS, we sometimes tried to make solutions agentic when that wasn’t strictly necessary. In those cases, a mostly linear workflow, with a very focused AI agent handling one small part of it, would have been the better solution.

One of Angelo’s learnings from this: “For example, fetching lines of code changed in our products is best done with a rigid, repeatable set of steps. Summarizing those changes and assessing the impact on our documentation, however, is best done with a smart agent. Sequencing the rigid and agentic steps into a single flow proved to be a working combination.”
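Here is a minimal TypeScript sketch of that combination: a deterministic step that counts changed lines in a diff, followed by an “agentic” step that is only passed in as a function. It is an illustration under assumptions rather than Angelo’s actual workflow. The `countChangedLines`, `reviewChange`, and `Summarize` names and the sample diff are hypothetical, the summarizer is a stub rather than a real model call, and the diff parsing is deliberately naive (any content line that starts with `---` or `+++` is treated as a file header).

```typescript
interface ChangeStats {
  added: number;
  removed: number;
}

interface Review {
  stats: ChangeStats;
  summary: string;
}

// Rigid step: count added and removed lines in a unified diff.
function countChangedLines(diff: string): ChangeStats {
  let added = 0;
  let removed = 0;
  for (const line of diff.split("\n")) {
    // Skip file header lines such as "--- a/file" and "+++ b/file".
    if (line.startsWith("+++") || line.startsWith("---")) continue;
    if (line.startsWith("+")) added++;
    else if (line.startsWith("-")) removed++;
  }
  return { added, removed };
}

// Agentic step, injected as a function so any model or agent could sit behind it.
type Summarize = (diff: string) => Promise<string>;

// Single flow: run the rigid step first, then call the agent only if needed.
async function reviewChange(diff: string, summarize: Summarize): Promise<Review> {
  const stats = countChangedLines(diff);
  if (stats.added + stats.removed === 0) {
    return { stats, summary: "No changes." };
  }
  const summary = await summarize(diff);
  return { stats, summary };
}

// Made-up diff for demonstration.
const sampleDiff = [
  "--- a/button.ts",
  "+++ b/button.ts",
  "@@ -1,2 +1,3 @@",
  '-const label = "Save";',
  '+const label = "Save changes";',
  '+const variant = "primary";',
  " export { label };",
].join("\n");

// Stub summarizer standing in for a real agent.
const stubSummarize: Summarize = async (diff) =>
  `Stub summary of a diff with ${diff.split("\n").length} lines`;

reviewChange(sampleDiff, stubSummarize).then((review) => console.log(review));
```

Because the rigid step runs first, the agent is never called for an empty diff, which also keeps token usage down.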

2. Connecting tools was a bigger hurdle than expected

A big part of what we wanted to do was to automate workflows across multiple tools. We ran into blockers multiple times while trying to connect those tools. What we thought would be a simple “enter API key and be done” often turned into a longer challenge with errors along the way.

We ran out of tokens (I’m personally to blame for blowing a month’s worth of token budget on a single afternoon of testing), hit quota limits, and produced some weird documents that we never meant to create.

This slowed our progress, and we spent a lot of time on “one-off” fixes at the beginning. In hindsight, I would focus more on setting up the core infrastructure before having the entire team start building. But on the bright side, we now all know the limitations and can work on the setup for personal projects as well.

3. Scoping agents down to something achievable

We had some grand ideas, but quickly found out that 1) we weren’t going to be able to get those done within the one-quarter timeframe we had set for ourselves, and 2) the more complexity we tried to build into one agent, the worse the results got. So a big part of our early planning and experimentation was about figuring out what’s realistic.

This also ties back into point one about simple sometimes being better. Once we focused on building MVPs and getting them to run error-free, we found our rhythm. We had more success building iteratively, making something work, and then adding complexity step by step.

4. Nail the mission of your agent and understand the process in depth before automating it

From Lidija: “Having a clear process mapped out before starting to build an agent is the right way to get going. I tried building a fully complex competitor-research agent, but realized I hadn’t nailed the competitive-research process myself first. It’s similar to wanting to hire someone junior for something you have no idea how to do yourself. Just hiring someone won’t fix that problem, because they will need a lot of oversight to execute the work, and if you don’t know what needs to be executed, you are not going to be successful.

That is why, at the end of the quarter, I ended up with the Competitor Intelligence Intern, an agent whose scope was limited to researching one very specific part of competitive analysis and producing scoped answers within my Notion database. Nothing too complex, but when a new name pops up in the industry, I at least have automation that gives me an initial glimpse into who they are and what they do, which helps me prioritize further research more effectively.”

5. Sharing learnings and successes along the way keeps motivation up

Most of us experienced waves of excitement, a bit of frustration, and rollercoaster ups and downs in how our agents performed. The dedicated Slack channel and weekly meetings were great ways to share progress, learnings, successes, and occasional failures.

What happened with our agents after the project ended

Full transparency: not all our agents passed their “probation period”. Some were more of a learning exercise than truly useful teammates, either because we overcomplicated them or because we got drawn into building something that COULD be automated rather than something we really NEEDED to automate. As Peter Drucker said, “Efficiency is doing things right. Effectiveness is doing the right things”.

We did get the effectiveness right with some of our agents, though. Lucie’s LLM tracker, which we used to track our own visibility on key topics across various LLMs, caught the attention of our sales team. It’s since evolved into a company-wide agent that our teams use to prepare for prospect and client meetings for our GEO landing page builder solution.

Where we go from here

We’ll continue evolving our existing agents, and this project has given us the conviction to build agents and custom GPTs for our daily work. Even without running a formal agent project this quarter, we have continued to build agentic and non-agentic AI solutions.