No matter how powerful AI tools like ChatGPT are, the quality of their output depends on how clearly and effectively you give them instructions. You may get poor results if you don’t know how to guide the AI model.

That’s where prompt engineering comes in.

Prompt engineering is the skill of communicating with large language models (LLMs) in a way that gets you the best possible outcome for your specific task, whether that’s writing, coding, summarizing, or anything else. Learning to prompt well is how you take full advantage of what these tools can do.

In this guide, you’ll learn what prompt engineering is, how it works, and how to apply it effectively across different use cases.

What is prompt engineering?

Prompt engineering is the process of writing effective inputs, or "prompts," that guide an AI model to give the output you want. It’s how you communicate with tools like ChatGPT to perform various tasks.

Think of it like giving instructions to a very smart assistant. The better you explain what you want, the better the response you’ll get. That could mean being more specific, using the right format, or providing examples the LLM can follow.

Why prompt engineering matters

AI models don’t read between the lines the way a colleague would. They can’t reliably infer what you meant but didn’t say, so they respond to what you actually ask. That means your results depend heavily on how you structure your prompt.

Without prompt engineering, you may get vague, inaccurate, or inconsistent outputs. But with well-crafted prompts, you can control the tone, structure, length, and other aspects of the AI’s response.

Proper prompting matters for several reasons:

- It helps you get clearer, more accurate results from the LLM.

- It saves time by reducing the need to rewrite or re-ask the same thing in different ways.

- It gives you more control over the format and style of the output.

- It’s essential when building or integrating AI into your apps, workflows, or products.

In short, the better your prompt, the better your outcome.

Anatomy of a good prompt — with examples

Now that we’ve covered what prompt engineering is and why it matters, let’s look at what actually makes a prompt “good.”

Most effective prompts share a few core elements:

Instructions

Instructions describe the core task and are the heart of your prompt. They tell the LLM exactly what action to take.

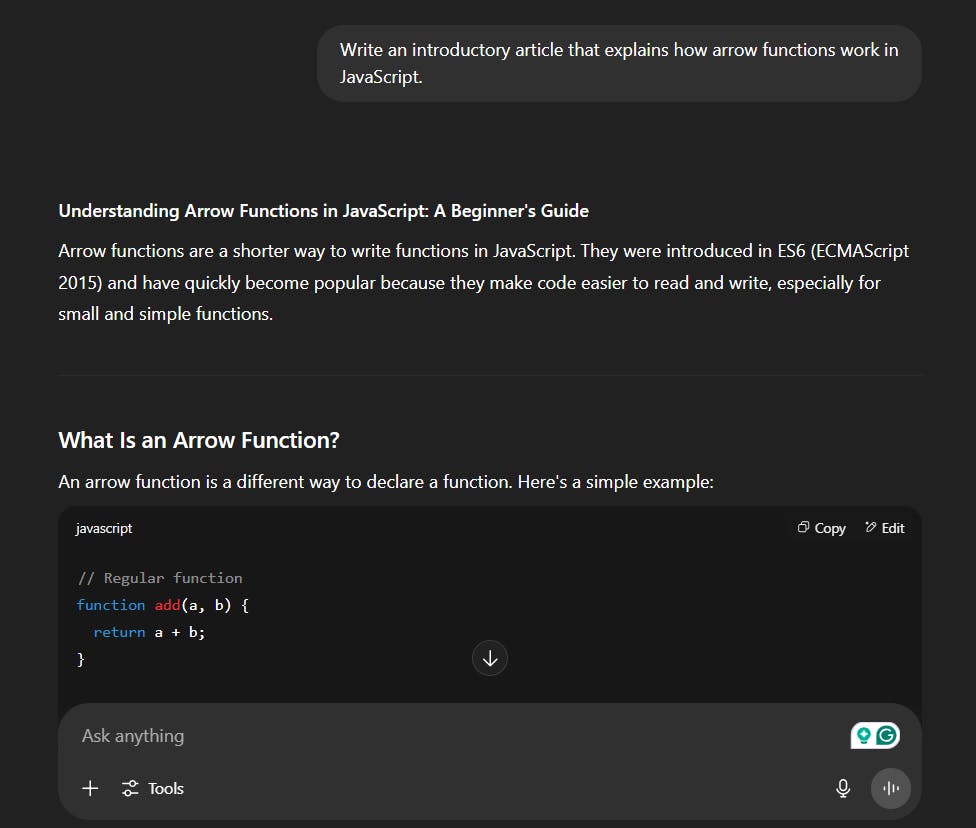

Say we intend to write an article about JavaScript arrow functions. The instruction could be: “Write an introductory article that explains how arrow functions work in JavaScript.”

Context

Instructions are a great starting point. However, you can further tailor the prompt by enriching it with the proper context.

Context is king when it comes to working with AI tools. The more relevant background information you provide, the better the model can understand your goal and generate output that matches your expectations.

Take the JavaScript article, for example. Instead of leaving the AI to guess, you can provide context such as:

- Who the audience is (e.g., beginners, intermediate devs, or senior engineers)

- What the content is for (e.g., blog post, technical documentation, course material)

- What the reader is expected to know already (e.g., basic JavaScript but not arrow functions)

- The desired tone (e.g., casual, formal, instructional)

- The platform or brand (e.g., writing for a coding bootcamp vs. a developer blog)

Adding even one or two of these points can help the model tailor its language, depth, and structure to better fit your use case.

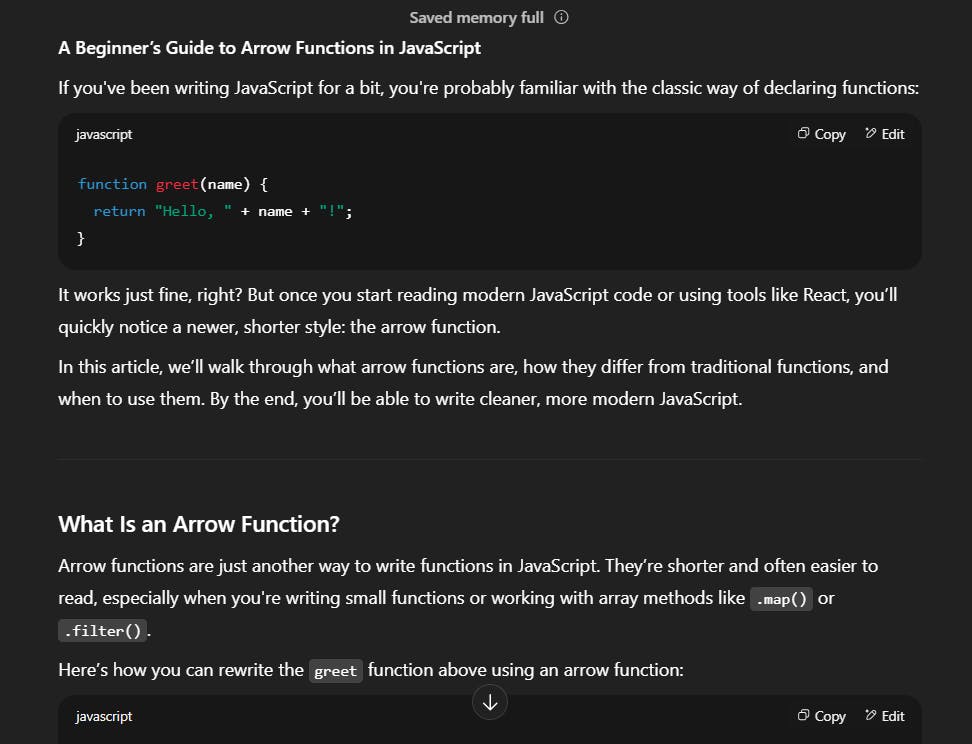

Now, let’s refine the earlier prompt by making it more specific: “Write an introductory article that explains how arrow functions work in JavaScript. The target audience is beginner web developers who are familiar with traditional function syntax but new to arrow functions. The article will be published on a programming blog.”

The moment we do that, the output changes.

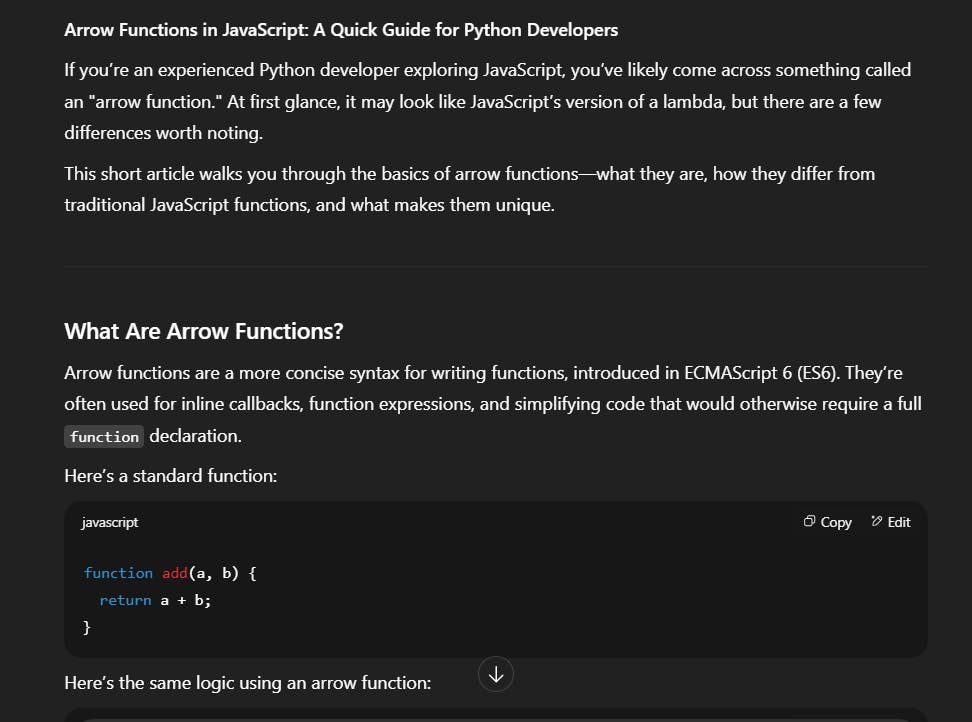

If we change the audience to “expert software engineers with experience in the Python programming language,” we get a different result.

As you can see, the right context transforms a basic prompt into a much more focused and useful one. We could continue tweaking the input with extra context, which would affect the AI’s output.

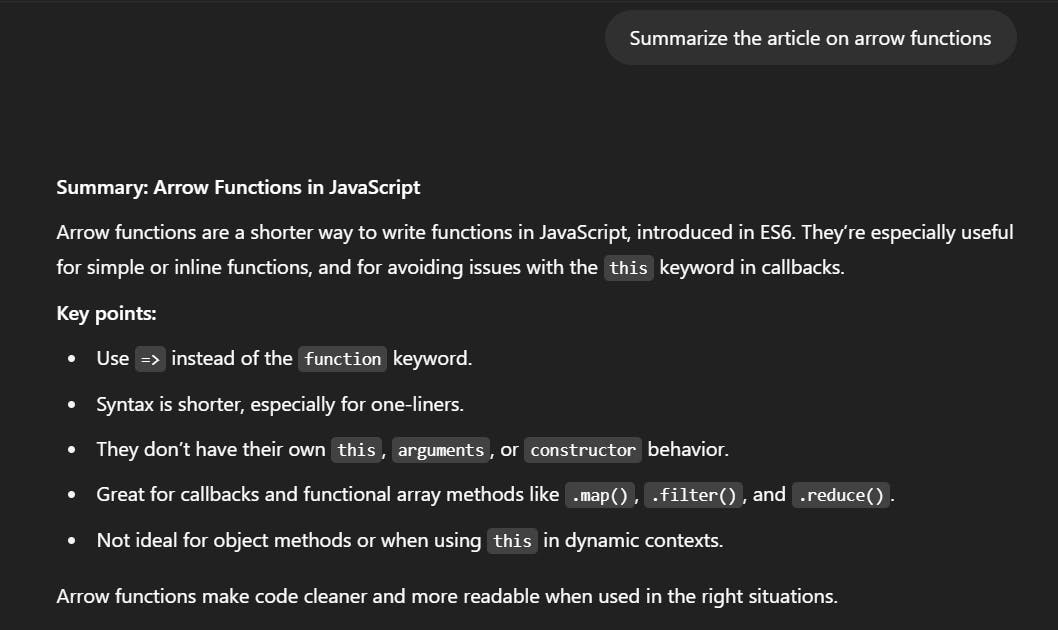

Format

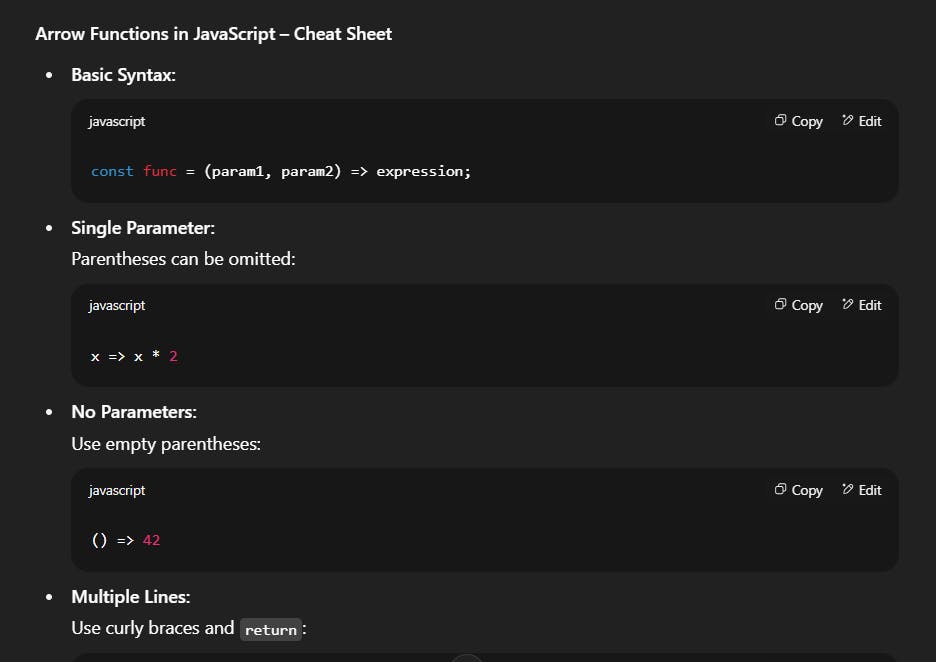

Another key element is the format. This tells the LLM how to structure its response, whether that’s a list, table, paragraph, tweet, JSON object, or something else. Without formatting directions, the output might not match how you plan to use it.

Suppose we change our mind and no longer want the info on arrow functions to be an article. Maybe we want to present it as a quick reference list for a cheat sheet or learning resource: “Explain how arrow functions work in JavaScript using a bullet-point list. Keep each point short and clear so it can be used in a cheat sheet.”

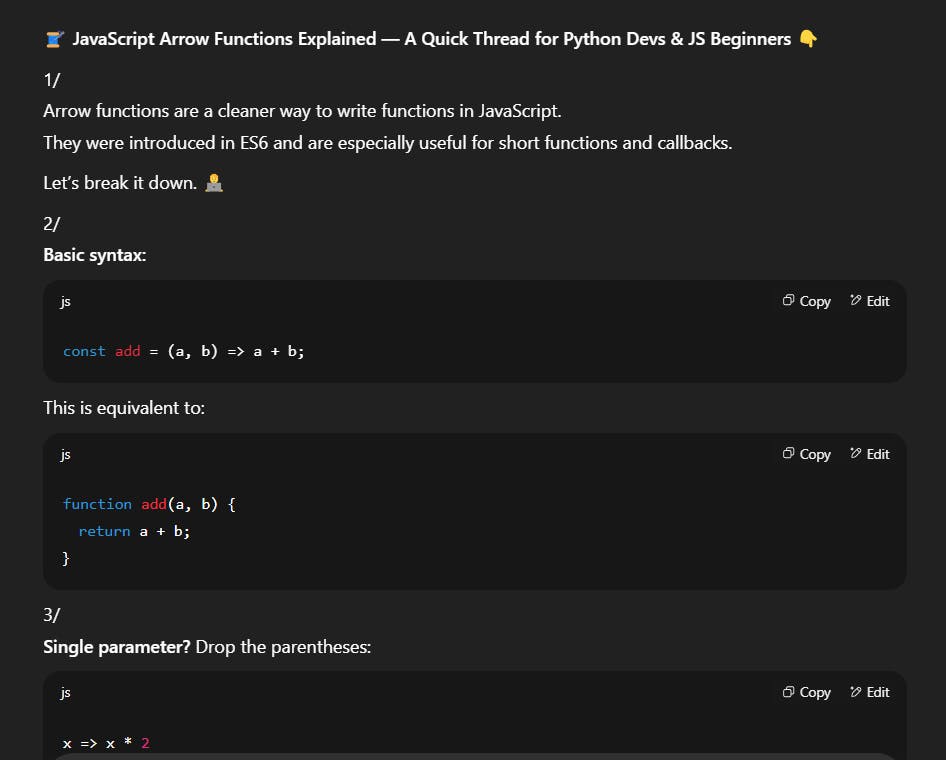

We could also turn it into an X (Twitter) thread.

By defining the format, you help the model organize its output in a way that’s more useful for your use case.
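When the output feeds into an app, asking for a machine-readable format such as JSON lets you check the response before using it. The sketch below assumes you asked the model for a JSON object with `title` and `points` keys (hypothetical names chosen for illustration) and validates a made-up reply with Python’s standard `json` module.

```python
import json

# Format instruction you might append to the prompt; the key names are hypothetical.
FORMAT_INSTRUCTION = (
    'Respond only with a JSON object of the form '
    '{"title": string, "points": [string, ...]}. Do not add any other text.'
)

def parse_cheat_sheet(response_text: str) -> dict:
    """Parse the model's reply and confirm it matches the requested shape."""
    data = json.loads(response_text)  # raises json.JSONDecodeError (a ValueError) if not JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("title"), str):
        raise ValueError("missing or non-string 'title'")
    points = data.get("points")
    if not isinstance(points, list) or not all(isinstance(p, str) for p in points):
        raise ValueError("'points' must be a list of strings")
    return data

# A made-up reply standing in for real model output.
reply = ('{"title": "Arrow functions", "points": '
         '["Use => instead of the function keyword", "Single expressions return implicitly"]}')
sheet = parse_cheat_sheet(reply)
print(sheet["title"])  # Arrow functions
```

If parsing fails, that’s usually a sign the format instruction needs tightening, rather than a reason to patch the output by hand.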

Constraints

AI tools can still go off the rails, even with clear instructions, context, and format. That’s where constraints come in.

Constraints are rules that shape the final output. They might cap the length, require or ban certain keywords, or spell out what not to include. Constraints help make the output usable and consistent.

Possible constraints for the JavaScript content could include:

- Keep the explanation under 300 words to maintain brevity.

- Don’t use em dashes.

- Exclude any mention of frameworks like React or Vue to keep the scope focused.

- Avoid technical jargon where possible to keep the explanation simple.

Constraints are used across many disciplines. For example, developers who use AI tools to vibe code often have a list of guidelines that shape the kind of output the AI generates.
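Because instructions, context, format, and constraints are separate pieces, you can assemble them mechanically. Here’s a minimal sketch using the arrow-function example from this section; the `PromptSpec` class and its field names are hypothetical, not part of any library.

```python
from dataclasses import dataclass, field

@dataclass
class PromptSpec:
    """Hypothetical container for the four elements of a good prompt."""
    instruction: str
    context: list[str] = field(default_factory=list)
    output_format: str = ""
    constraints: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Start with the core task, then add each optional section only if it has content.
        parts = [self.instruction]
        if self.context:
            parts.append("Context:\n" + "\n".join(f"- {c}" for c in self.context))
        if self.output_format:
            parts.append("Format: " + self.output_format)
        if self.constraints:
            parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in self.constraints))
        return "\n\n".join(parts)

spec = PromptSpec(
    instruction="Explain how arrow functions work in JavaScript.",
    context=[
        "The audience is beginner web developers who know traditional function syntax.",
        "The content will be published on a programming blog.",
    ],
    output_format="A bullet-point list suitable for a cheat sheet.",
    constraints=[
        "Keep the explanation under 300 words.",
        "Don't mention frameworks like React or Vue.",
    ],
)
print(spec.render())
```

Keeping the elements separate makes it easy to swap the audience or format without rewriting the whole prompt.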

Prompt engineering techniques

Prompt engineering isn't just about writing instructions. It's about writing them strategically. There are techniques you can use to enhance clarity, control the output, and consistently get better results from AI tools.

Zero-shot prompting

Zero-shot prompting is when you ask the model to perform a task without giving it any examples. Here, you rely entirely on the model’s pretraining to understand the task based on the phrasing of your instruction.

Use zero-shot when your task is clear and doesn’t require a specific format or tone, like definitions or explanations, summarization, classification, simple question answering, and language translation.

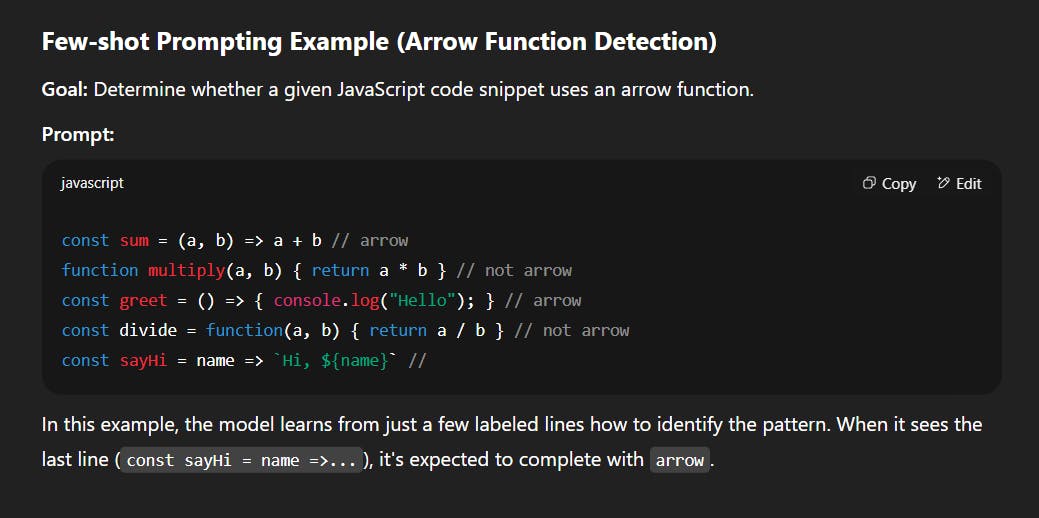

One-shot and few-shot prompting

These techniques involve providing one (one-shot) or a few (few-shot) examples along with your prompt to help the LLM understand the desired pattern or format.

Examples are great because they help guide the model, especially for less common tasks or where specific structures or formats matter.

For example, say we want to create a prompt that checks whether a given JavaScript function is written using arrow function syntax. To achieve this, we can provide some examples (few-shot) to teach the model exactly how to classify the snippets.

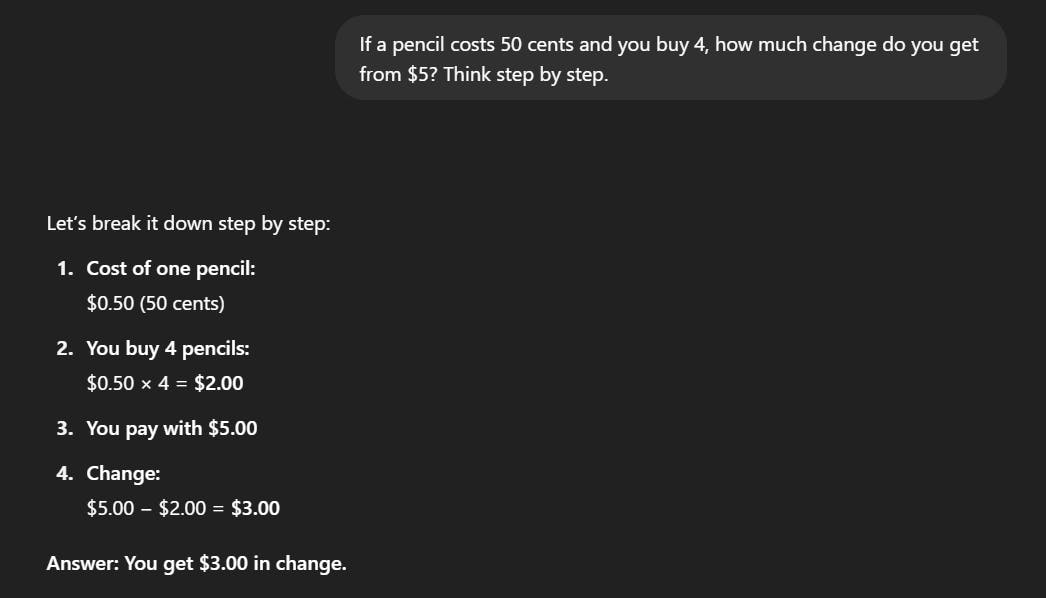
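A few-shot prompt is often just the examples laid out in a consistent pattern, followed by the new input in the same pattern with the answer left blank. The sketch below builds such a prompt for the arrow-function classifier described above; the snippets and the `Code:`/`Label:` layout are illustrative choices, not a required format. Passing an empty example list produces a zero-shot prompt instead.

```python
def few_shot_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a prompt from a task description, (input, label) examples, and a new input."""
    blocks = [task]
    for code, label in examples:
        blocks.append(f"Code: {code}\nLabel: {label}")
    # The final block repeats the pattern but leaves the label for the model to fill in.
    blocks.append(f"Code: {query}\nLabel:")
    return "\n\n".join(blocks)

examples = [
    ("const add = (a, b) => a + b;", "arrow function"),
    ("function add(a, b) { return a + b; }", "not an arrow function"),
    ("const greet = name => `Hello, ${name}`;", "arrow function"),
]

prompt = few_shot_prompt(
    "Classify whether each JavaScript snippet uses arrow function syntax.",
    examples,
    "const square = function (x) { return x * x; };",
)
print(prompt)
```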

Chain-of-thought (CoT) prompting

Chain-of-thought prompting encourages the model to explain its reasoning step by step before answering. It works best for math problems, logic puzzles, or other tasks that require multiple steps.
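In practice, chain-of-thought prompting often means asking for the reasoning plus a clearly marked final answer, so your code can separate the two. The sketch below assumes you ask the model to end with an `Answer:` line (a convention chosen here, not a standard), and it uses a made-up response instead of a real API call.

```python
import re
from typing import Optional

def cot_prompt(question: str) -> str:
    # Ask for step-by-step reasoning, plus a final line in a fixed, parseable form.
    return (
        f"{question}\n\n"
        "Think through the problem step by step. "
        "Then give the final result on its own line in the form 'Answer: <value>'."
    )

def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if it's missing."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None

question = "A train leaves at 9:40 and the trip takes 2 hours 35 minutes. When does it arrive?"
print(cot_prompt(question))

# A hypothetical model response.
response = """Start at 9:40.
Adding 2 hours gives 11:40.
Adding 35 more minutes gives 12:15.
Answer: 12:15"""
print(extract_answer(response))  # 12:15
```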

ReAct (reasoning + acting)

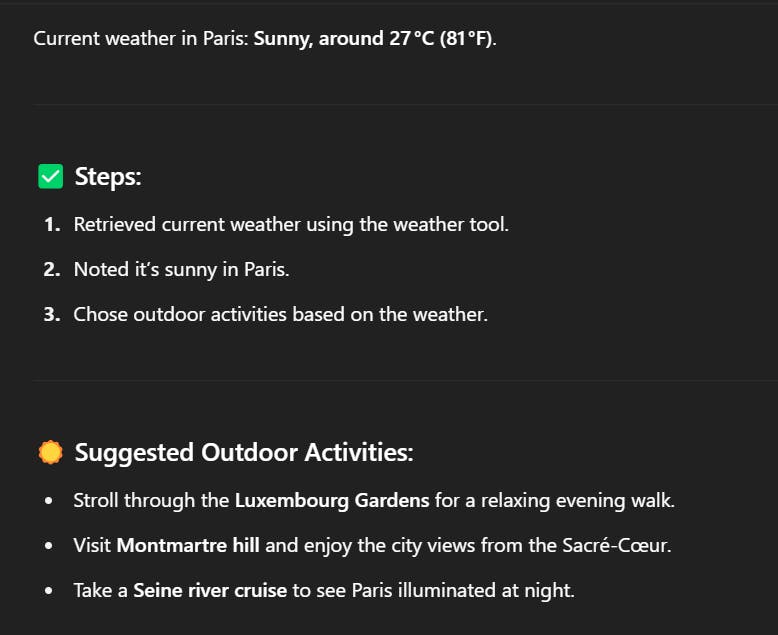

ReAct prompting combines reasoning (thinking through the problem) with acting (using tools, APIs, or retrieval to get more information).

The model reasons about a problem, takes action to fetch external data (e.g., from a database or API), then continues reasoning based on the results.

Here’s an example ReAct prompt: “What's the current weather in Paris? If it's raining, suggest three indoor activities. If it's sunny, suggest three outdoor activities. Show your steps.”

ReAct is best used in systems where the AI can interact with external tools, like coding assistants, chatbots, or decision-making agents.
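To make the reason-act loop concrete, here’s a minimal ReAct-style sketch for the Paris prompt. The model is replaced by a scripted stand-in and the weather lookup by a hypothetical `get_weather` function that returns fake data, so it runs without any API. The `Thought:`/`Action:`/`Observation:`/`Final Answer:` labels are one common convention, not a fixed standard.

```python
import re
from typing import Callable

def get_weather(city: str) -> str:
    """Hypothetical tool; a real system would call a weather API here."""
    fake_data = {"Paris": "raining, 14°C"}
    return fake_data.get(city, "unknown")

TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}

def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM: returns the next Thought/Action or a Final Answer."""
    if "Observation:" not in transcript:
        return "Thought: I need the current weather in Paris.\nAction: get_weather[Paris]"
    if "Observation: raining" in transcript:
        return ("Thought: It's raining, so indoor activities make sense.\n"
                "Final Answer: Visit the Louvre, tour the Musée d'Orsay, or take a cooking class.")
    return ("Thought: It isn't raining, so outdoor activities make sense.\n"
            "Final Answer: Walk along the Seine, picnic in a park, or climb the Eiffel Tower.")

def react(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model reasoning with tool calls until the model gives a final answer."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += "\n" + step
        final = re.search(r"Final Answer:\s*(.+)", step)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", step)
        if action is None:
            break  # the model neither called a tool nor answered
        name, arg = action.groups()
        tool = TOOLS.get(name)
        observation = tool(arg) if tool else f"unknown tool '{name}'"
        # Append the tool result so the next reasoning step can use it.
        transcript += f"\nObservation: {observation}"
    return "No final answer within the step limit."

question = ("What's the current weather in Paris? If it's raining, suggest three indoor "
            "activities. If it's sunny, suggest three outdoor activities. Show your steps.")
print(react(question, scripted_model))
```

The `max_steps` cap is a simple safeguard so a model that keeps calling tools can’t loop forever.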

Self-consistency

Self-consistency means running the same prompt multiple times and comparing the outputs to find the most consistent, and most likely correct, answer. Because AI outputs can vary from run to run (due to random sampling), generating several completions and picking the answer that appears most often makes the result more reliable.
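In code, self-consistency amounts to sampling several answers and taking a majority vote. The sketch below assumes `sample` is some function that calls your model once (for example, with a nonzero temperature) and returns only the final answer; here it’s replaced by canned strings so the example runs offline.

```python
from collections import Counter
from typing import Callable

def self_consistent_answer(sample: Callable[[str], str], prompt: str, n: int = 5) -> tuple[str, float]:
    """Sample n answers to the same prompt and return the most common one with its vote share."""
    answers = [sample(prompt).strip() for _ in range(n)]
    best, votes = Counter(answers).most_common(1)[0]
    return best, votes / n

# Stand-in for repeated model calls: canned answers returned in sequence.
canned = iter(["12:15", "12:15", "12:05", "12:15", "11:75"])
answer, share = self_consistent_answer(lambda p: next(canned), "When does the train arrive?", n=5)
print(answer, share)  # 12:15 0.6
```

Voting works best when answers are short and normalized, such as a number or a label, so that equivalent answers are counted together.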

Tree-of-thought

Tree-of-thought prompting breaks a problem into a branching series of ideas or possibilities instead of a straight line of reasoning. It allows the LLM to explore multiple angles or solutions (like a decision tree) before selecting the best one.

For example, when solving an issue, you could ask the AI model: “Generate multiple possible steps for solving this problem. Evaluate the pros and cons of each one and choose the most effective path.”

This technique is great for brainstorming, planning, coding strategies, or situations where there isn’t one correct answer.
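The branch-evaluate-prune idea can be sketched as a small beam search. This is a simplified stand-in: `propose` and `score` are plain functions on a toy number puzzle, whereas a real tree-of-thought setup would have the model generate and rate the candidate steps.

```python
from typing import Callable, TypeVar

State = TypeVar("State")

def tree_of_thought(
    root: State,
    propose: Callable[[State], list[State]],
    score: Callable[[State], float],
    depth: int,
    beam_width: int,
) -> State:
    """Breadth-first search over candidate 'thoughts', keeping the best few at each level."""
    frontier = [root]
    for _ in range(depth):
        # Branch: expand every kept state, dropping duplicates (states must be hashable).
        candidates = list(dict.fromkeys(c for s in frontier for c in propose(s)))
        if not candidates:
            break
        # Evaluate and prune: keep only the most promising branches.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)

# Toy problem: starting from 1, get as close to 10 as possible in four "+1" or "*2" steps.
propose = lambda n: [n + 1, n * 2]
score = lambda n: -abs(10 - n)
print(tree_of_thought(1, propose, score, depth=4, beam_width=1))  # 9
print(tree_of_thought(1, propose, score, depth=4, beam_width=3))  # 10
```

With a beam width of 1, the search follows a single greedy path (1, 2, 4, 8, 9) and misses the target; keeping three branches alive lets it find 1, 2, 4, 5, 10.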


Prompt engineering best practices and tips

Be specific and clear

Vague instructions lead to vague results. Tell the model exactly what you want it to do, and avoid assuming it will fill in gaps the way a human would.

Use roleplay and instructions when needed

If you want the AI to adopt a tone, persona, or behavior, say so. Role-based instructions like “You are a senior JavaScript instructor with 15 years of experience” can help determine the tone and level of expertise.
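With chat-style APIs, a persona is often placed in a system message ahead of the user’s request. The sketch below only builds the message list, using the `role`/`content` shape that OpenAI’s Chat Completions API accepts; sending it to a model is left out.

```python
def build_messages(role_description: str, task: str) -> list[dict[str, str]]:
    """Put the persona in a system message and the task in a user message."""
    return [
        {"role": "system", "content": role_description},
        {"role": "user", "content": task},
    ]

messages = build_messages(
    "You are a senior JavaScript instructor with 15 years of experience.",
    "Explain how arrow functions differ from regular functions.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```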

Break tasks into steps

When you give an AI model a broad or complex instruction, it can be too much for it to handle, leading to inconsistent outputs. Breaking the task down makes it easier for the model to stay focused and accurate.

This principle is particularly useful when tackling technical or programming-related problems. Asking a model to “Build a full-featured to-do app with login, user management, and real-time syncing” is likely to fail or return low-quality results. It’s too much at once.

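Instead, break the project into smaller instructions that the model can handle one step at a time. For example, start with a prompt for the basic to-do list, then use separate prompts to add login, user management, and real-time syncing.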
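One way to apply this is to keep the smaller instructions in a list and send them one at a time, feeding each result into the next prompt. In the sketch below, the step wording and the `ask` function are hypothetical; `ask` stands in for whatever call you use to reach your model.

```python
from typing import Callable

# Hypothetical breakdown of the to-do app into smaller prompts, one feature at a time.
STEPS = [
    "Create a minimal to-do list where users can add, complete, and delete tasks.",
    "Add email and password login to the app.",
    "Add user management so each user only sees their own tasks.",
    "Add real-time syncing so task changes appear across open sessions.",
]

def run_in_steps(steps: list[str], ask: Callable[[str], str]) -> list[str]:
    """Send each step as its own prompt, including the previous result as context."""
    outputs: list[str] = []
    previous = ""
    for i, step in enumerate(steps, start=1):
        prompt = f"Step {i} of {len(steps)}: {step}"
        if previous:
            prompt += f"\n\nHere is the current code to build on:\n{previous}"
        previous = ask(prompt)
        outputs.append(previous)
    return outputs

# Stand-in for a model call that just echoes the first line of each prompt.
results = run_in_steps(STEPS, ask=lambda prompt: f"<code for: {prompt.splitlines()[0]}>")
for r in results:
    print(r)
```

Reviewing each intermediate result before moving on also makes it easier to spot which step went wrong.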

When to keep it simple vs. complex

Simple prompts are easier to write and test, but complex ones offer more control. If you're exploring or brainstorming, short prompts are fine. But for precise results, especially in production, more structure is better.

You can start simple, then add instructions, examples, and constraints as needed.

Have prompt templates

Creating reusable prompt templates can be helpful if you find yourself repeating similar tasks. These templates will save time and keep your results consistent.

Templates also make it easy to scale tasks since you won’t need to start from scratch each time. You can adjust the wording, examples, or format without having to rethink the entire structure.
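Python’s standard `string.Template` is one lightweight way to keep a prompt’s structure fixed while swapping in the details. The placeholder names below are illustrative, and the filled-in values reuse the arrow-function example from earlier.

```python
from string import Template

# A reusable template; $topic, $audience, $format, and $word_limit are placeholders.
EXPLAINER = Template(
    "Write an explanation of $topic.\n"
    "The audience is $audience.\n"
    "Format the answer as $format.\n"
    "Keep it under $word_limit words."
)

prompt = EXPLAINER.substitute(
    topic="arrow functions in JavaScript",
    audience="beginner web developers",
    format="a bullet-point list",
    word_limit=300,
)
print(prompt)
```

`substitute` raises a `KeyError` if you leave out a placeholder, which catches incomplete prompts before they reach the model.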

Add clear constraints, like “do not hallucinate”

LLMs tend to make educated guesses when they’re unsure about something. When such a guess produces false or made-up information, it’s known as a hallucination. While the guess might sound confident, it could be completely incorrect.

That behavior might be fine for low-risk tasks like brainstorming ideas or creative writing. But it can be risky in technical, legal, or financial contexts where accuracy matters.

One simple way to reduce hallucinations is to explicitly tell the model not to make things up. It won’t eliminate the problem, but it often helps. Adding clear constraints like “Only include verifiable facts” or “Don’t make up answers” encourages the model to stick to what it’s confident about.

Improving AI responses with prompt debugging

Even well-written prompts won’t always return the result you expect. That’s where prompt debugging comes in. Just like debugging code, prompt debugging means identifying what’s going wrong in the output and adjusting your input to fix it.

Start by looking closely at the response. Is it too vague? Too long? Off-topic? Missing a format? Then go back to your prompt and ask:

- Did I give enough context?

- Was the instruction too broad or unclear?

- Did I forget to set constraints or define the tone?

The first version of your prompt doesn’t have to be the final one. Try variations, test different angles, and compare results. Over time, you’ll learn what works best for different tasks, and your prompts will become more effective.
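If you iterate on a prompt often, it can help to turn those questions into quick automated checks on each response. The sketch below is one hypothetical way to do it: the checks (word limit, bullet format, required terms) mirror the symptoms described above, and the sample response is made up.

```python
from typing import Optional

def review_output(text: str, max_words: Optional[int] = None,
                  expect_bullets: bool = False,
                  required_terms: tuple[str, ...] = ()) -> list[str]:
    """Return a list of problems found in a model response (empty if none)."""
    problems = []
    words = len(text.split())
    if max_words is not None and words > max_words:
        problems.append(f"too long: {words} words (limit {max_words})")
    if expect_bullets:
        lines = [line for line in text.splitlines() if line.strip()]
        if not lines or not all(line.lstrip().startswith(("-", "*", "•")) for line in lines):
            problems.append("missing format: expected a bullet-point list")
    for term in required_terms:
        if term.lower() not in text.lower():
            problems.append(f"missing expected term: '{term}'")
    return problems

response = "Arrow functions are a shorter way to write functions in JavaScript. They use =>."
for issue in review_output(response, max_words=300, expect_bullets=True, required_terms=("return",)):
    print(issue)
```

Each flagged problem points back to a prompt fix: a length limit, a format instruction, or more specific context.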

Why the AI model you use matters

So far, we’ve covered how to write good prompts. But one of the most critical factors, often more important than the prompt itself, is the AI model you’re using.

Different models are trained with different strengths, data sources, and design goals. Some are great for reasoning. Others excel at coding or summarizing.

There are more AI models on the market than we can cover here. However, let’s review some models from three top providers and see what each one does best.

OpenAI’s models

OpenAI gives you access to different models for different needs. If you're handling everyday tasks that don’t require deep reasoning, the GPT-4.1 model is a solid general-purpose option.

When your work involves complex logic, scientific problems, or advanced reasoning, consider one of the models in the o-series. The o3 model helps you tackle high-level thinking and problem-solving. If you need even more reliability, o3-pro gives the model more time to think through its answers, but it comes at a higher cost.

Looking for strong reasoning without breaking your budget? Try the o4-mini. It performs close to o3, but at a lower price, making it a smart choice for logic-heavy work.

Anthropic’s Claude models

If you're working on tasks that involve reasoning, coding, or AI agents, Anthropic’s Claude models are worth exploring.

Claude Opus 4 is built for advanced use cases where you need deep thinking, memory, and flexibility. You can use it for long-form content, big codebases, or building autonomous agents. With its 200K-token context window, you can input large documents or long conversations without worrying about losing context.

Need something faster and more affordable? Claude Sonnet 4 offers a good mix of speed, intelligence, and cost-efficiency. It costs one-fifth as much as Opus 4 and improves on the earlier Claude 3.5 Sonnet in reasoning, accuracy, and formatting. You’ll find it helpful for chatbots, content reviews, or workflows that need smart but fast responses.

Google’s Gemini models

Gemini 2.5 Pro is Google’s most advanced thinking model. Use it when you're working on complex code, data analysis, or multimodal reasoning. It’s designed for accuracy and does best when your tasks need detailed understanding and carefully thought-out answers.

Gemini 2.5 Flash is a faster, budget-friendly option. It’s great for building apps like chatbots or assistants that need quick outputs, especially for real-time interactions, content creation, or summarizing large inputs.

Final thoughts

Prompt engineering is a practical skill that helps you get better results from AI tools. By learning how to give clear instructions, provide context, set constraints, and choose the right format, you can improve the quality, accuracy, and usefulness of AI responses.

Whether you're writing, coding, building apps, or just experimenting, prompt engineering gives you more control over what the AI does. As AI continues to grow in both capability and use, knowing how to prompt well will become an essential part of working with these tools effectively. Start simple, test often, and improve as you go.

What interesting quirks have you noticed while working with AI tools? Maybe an output that went completely off the rails and shocked you, or a prompt that got surprisingly good results?

Share your experience; drop a comment below! 👇